[from an email to friends on 13 Aug 2003]

Okay, so the problem with all these ‘eye-candy’ music visualization programs (stand-alone or part of iTunes, Winamp, XMMS, etc.) is that they have to go thru the process of sleuthing out interesting ‘events’ in the audio, in real time, to act as triggers and modifiers for the visuals.

As we’ve all seen, this is pretty hard (the results mostly suck), and they usually just devolve into the “twirly oscilloscope with color fade” effect. The delay between the audio and the video must be very small to go unnoticed, which leaves very little time for real-time analysis.

If instead there existed a visualizer that used a pre-computed ‘event track’ for a song, the visualizer could spend its time doing much more interesting and meaningful things than FFTs and such. And let’s say that there exist, CDDB-style, various network- or disk-available caches of these ‘event tracks’ that the visualizer would check before displaying pretty pictures.
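To make the CDDB analogy concrete: CDDB (and freedb, which forked from it) identified an audio CD by a 32-bit disc ID computed from the disc’s table of contents. An event-track cache could plausibly be keyed on that ID plus a track number. The sketch below assumes that keying scheme and a hypothetical on-disk layout (`event_cache/<discid>/<track>.evt`). Neither comes from any existing event-track service.

```python
from pathlib import PurePosixPath

FRAMES_PER_SECOND = 75  # CD frames (sectors) per second


def digit_sum(n: int) -> int:
    """Sum of decimal digits, as used by the freedb disc-ID checksum."""
    total = 0
    while n > 0:
        total += n % 10
        n //= 10
    return total


def cddb_disc_id(track_offsets: list[int], leadout: int) -> int:
    """freedb-style disc ID from track start offsets and the lead-out offset.

    All offsets are absolute frame addresses (including the 150-frame lead-in).
    """
    checksum = sum(digit_sum(off // FRAMES_PER_SECOND) for off in track_offsets)
    total_seconds = leadout // FRAMES_PER_SECOND - track_offsets[0] // FRAMES_PER_SECOND
    return ((checksum % 0xFF) << 24) | (total_seconds << 8) | len(track_offsets)


def event_track_path(disc_id: int, track_number: int) -> PurePosixPath:
    """Hypothetical cache location for one song's event track."""
    return PurePosixPath("event_cache") / f"{disc_id:08x}" / f"{track_number:02d}.evt"


if __name__ == "__main__":
    disc = cddb_disc_id([150, 15000, 30000], leadout=45000)
    print(f"{disc:08x}")              # 08025603
    print(event_track_path(disc, 2))  # event_cache/08025603/02.evt
```

A visualizer would compute the ID from the CD (or from tags on a ripped file), then try the local cache path before asking a network server.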

Soon a whole community exists that creates and submits these event tracks, just as CDDB avalanched into usefulness because of the network effects of having more CDs in its database.

What are these ‘event tracks’? At its simplest, I was imagining something small: 32 bits for each 100ms chunk of audio, one bit per frequency band (each band, say, 1/4 octave wide). Perhaps later we create extended events that indicate something very high-level like ‘intro’, ‘verseA’, ‘bridge’, ‘chorus’, ‘break’, ‘drum solo’, etc.
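One way to read the 32-bit scheme is this: if each band is a quarter octave wide, 32 bands cover 8 octaves. The sketch below assumes the lowest band starts at 40 Hz, so the top edge lands at 40 × 2^8 = 10,240 Hz. It stores one 32-bit word per 100ms frame, with bit *k* set when band *k* had an event. The base frequency, bit order, millisecond timing, and helper names are illustrative assumptions, not part of the original idea.

```python
import math
from typing import Iterable, Optional, Sequence

FRAME_MS = 100          # one event word per 100 ms of audio
NUM_BANDS = 32          # one bit per band
BANDS_PER_OCTAVE = 4    # 1/4-octave bands, so 32 bands span 8 octaves
BASE_HZ = 40.0          # assumed lower edge of band 0 (top edge 40 * 2**8 = 10240 Hz)


def band_edges(k: int) -> tuple[float, float]:
    """Lower and upper edge (Hz) of band k."""
    lo = BASE_HZ * 2 ** (k / BANDS_PER_OCTAVE)
    hi = BASE_HZ * 2 ** ((k + 1) / BANDS_PER_OCTAVE)
    return lo, hi


def band_for_frequency(hz: float) -> Optional[int]:
    """Band index containing hz, or None if hz is outside the 8-octave range."""
    if hz < BASE_HZ:
        return None
    k = int(BANDS_PER_OCTAVE * math.log2(hz / BASE_HZ))
    return k if k < NUM_BANDS else None


def pack_word(active_bands: Iterable[int]) -> int:
    """Pack active band indices into one 32-bit event word."""
    word = 0
    for k in active_bands:
        if not 0 <= k < NUM_BANDS:
            raise ValueError(f"band {k} out of range")
        word |= 1 << k
    return word


def unpack_word(word: int) -> list[int]:
    """Band indices whose bits are set, lowest band first."""
    return [k for k in range(NUM_BANDS) if (word >> k) & 1]


def event_at(track: Sequence[int], position_ms: int) -> int:
    """Render-time lookup for a visualizer: index by playback position, no FFT."""
    i = position_ms // FRAME_MS
    return track[i] if 0 <= i < len(track) else 0


if __name__ == "__main__":
    print(unpack_word(pack_word({5, 13})))  # [5, 13]
```

At 4 bytes per 100ms frame, a four-minute song is 2,400 frames, or 9,600 bytes of event data. That is small enough to pass around like CDDB entries.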

How do we create these ‘event tracks’? For the simplest event type, perhaps we start out with a very good (and thus not real-time) method of analyzing audio into the 32 bins per 100ms. That would be a start.
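A deliberately simple offline version of that analysis might look like the following. It splits the audio into 100ms frames and measures the energy in each quarter-octave band with a plain DFT. A band’s bit is set when its energy rises sharply over the previous frame. This is a generic per-band onset heuristic, swapped in purely for illustration. The 40 Hz base, the 1.5× rise, and the 1% floor are assumptions, and the ‘very good’ method the email asks for would need far more care. The code uses pure Python so it is self-contained; a real tool would use an FFT library.

```python
import math
from typing import Sequence

NUM_BANDS = 32
BANDS_PER_OCTAVE = 4
BASE_HZ = 40.0   # assumed lower edge of band 0
RISE = 1.5       # assumed: a band fires when its energy rises 1.5x over the previous frame
FLOOR = 0.01     # assumed: ignore bands below 1% of the frame's loudest band


def band_energies(frame: Sequence[float], sample_rate: int) -> list[float]:
    """Energy per quarter-octave band for one frame, via a naive O(n^2) DFT.

    With 100 ms frames the DFT bins are 10 Hz apart, so the lowest bands
    contain only one or two bins each.
    """
    n = len(frame)
    energies = [0.0] * NUM_BANDS
    for j in range(1, n // 2 + 1):  # skip DC, stop at Nyquist
        hz = j * sample_rate / n
        if hz < BASE_HZ:
            continue
        k = int(BANDS_PER_OCTAVE * math.log2(hz / BASE_HZ))
        if k >= NUM_BANDS:
            break  # bin frequencies only increase from here
        w = 2 * math.pi * j / n
        re = sum(x * math.cos(w * t) for t, x in enumerate(frame))
        im = sum(x * math.sin(w * t) for t, x in enumerate(frame))
        energies[k] += re * re + im * im
    return energies


def analyze(samples: Sequence[float], sample_rate: int) -> list[int]:
    """Offline pass over a whole song: one 32-bit event word per 100 ms frame."""
    frame_len = sample_rate // 10  # 100 ms of samples
    words: list[int] = []
    previous = [0.0] * NUM_BANDS
    for start in range(0, len(samples) - frame_len + 1, frame_len):
        energies = band_energies(samples[start:start + frame_len], sample_rate)
        loudest = max(energies)
        word = 0
        for k, (e, prev) in enumerate(zip(energies, previous)):
            if e > FLOOR * loudest and e > RISE * prev:
                word |= 1 << k
        words.append(word)
        previous = energies
    return words


if __name__ == "__main__":
    sr = 2000  # low rate keeps the naive DFT fast for a demo
    tone = [math.sin(2 * math.pi * 440 * t / sr) for t in range(600)]
    words = analyze([0.0] * 600 + tone, sr)
    print(words)  # silence, then a 440 Hz onset in band 13, then nothing new
```

Because it compares each frame only with the one before it, a sustained note fires once at its onset rather than in every frame. A GUI pass like the one described next is where a person would fix its misses.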

Maybe we create a GUI that shows the audio with semi-transparent overlays of what, where & when the algorithm thinks the bins should be. It would let users nudge the events, clean them up, and add the higher-level events (“the chorus starts here, the break happens here”).

You may be thinking that at some point these ‘event tracks’ start looking like MIDI data. Hey, good idea: that gives us another way to pre-generate the higher-level events, if we’re given a sufficiently accurate MIDI track of a song. (Many MIDI files exist on the Net; it would be interesting to see how accurate they are when compared against the recording.)
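Going the other way, a MIDI file already lists note-on times and pitches, so it can be turned into the same 32-bit-per-100ms words without any audio analysis. The sketch assumes the note-ons have already been extracted as (milliseconds, note number) pairs; parsing the Standard MIDI File itself is left out. It also assumes the same hypothetical 40 Hz, quarter-octave band layout as above. Pitch is mapped with the usual equal-temperament formula, f = 440 · 2^((n - 69)/12).

```python
import math

FRAME_MS = 100
NUM_BANDS = 32
BANDS_PER_OCTAVE = 4
BASE_HZ = 40.0  # assumed lower edge of band 0


def note_to_hz(note: int) -> float:
    """Equal-tempered frequency of a MIDI note number (A4 = note 69 = 440 Hz)."""
    return 440.0 * 2 ** ((note - 69) / 12)


def midi_to_event_track(note_ons: list[tuple[int, int]], duration_ms: int) -> list[int]:
    """Set the band bit for each note-on in the 100 ms frame where it starts."""
    n_frames = -(-duration_ms // FRAME_MS)  # ceiling division
    words = [0] * n_frames
    for ms, note in note_ons:
        hz = note_to_hz(note)
        if hz < BASE_HZ:
            continue  # below the lowest band
        band = int(BANDS_PER_OCTAVE * math.log2(hz / BASE_HZ))
        frame = ms // FRAME_MS
        if band < NUM_BANDS and 0 <= frame < n_frames:
            words[frame] |= 1 << band
    return words


if __name__ == "__main__":
    # A4 at 0 ms, then C4 and C5 together at 250 ms, in a 500 ms clip.
    print(midi_to_event_track([(0, 69), (250, 60), (250, 72)], 500))
```

Comparing a track built this way against one from audio analysis would be one rough way to check how accurate a downloaded MIDI file really is.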

And maybe as a value-add we include the lyrics too. Instant Karaoke with trippy visuals!

Imagine what else one could do with an accurate ‘event track’ of a piece of audio! Besides really nice visuals, a video-editing suite could import it as a template for when (and what kind of) wipes or other video manipulations should occur. Auto-editing of video! (Well, almost.) DJs could find a use for the events, I’m sure.
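As one hypothetical take on the auto-editing idea, an editor could treat frames where many bands fire at once as candidate cut points, while enforcing a minimum shot length. The thresholds below are arbitrary assumptions for illustration: at least 4 active bands, with cuts at least 2 s apart. Each word is assumed to cover 100ms, as in the scheme above.

```python
def cut_points_ms(track: list[int], min_bands: int = 4, min_gap_ms: int = 2000) -> list[int]:
    """Times (ms) of event-dense frames that could serve as video cut points."""
    cuts: list[int] = []
    for i, word in enumerate(track):
        t = i * 100  # each event word covers 100 ms
        dense = bin(word).count("1") >= min_bands  # number of bands firing
        if dense and (not cuts or t - cuts[-1] >= min_gap_ms):
            cuts.append(t)
    return cuts


if __name__ == "__main__":
    track = [0] * 40
    track[5] = 0b1111       # 4 bands -> cut at 500 ms
    track[10] = 0xFF        # dense, but only 500 ms after the last cut
    track[35] = 0xF0        # 4 bands, 3000 ms later -> cut
    print(cut_points_ms(track))  # [500, 3500]
```

The same loop could choose a transition type from *which* bands fired, for example a hard cut on low-band hits and a wipe on high-band ones.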

Oh and how do we make money with it? Fuck if I know. Maybe we sell the

really kick-ass GUI event creation tools and leave the punters with the

command-line Perl modules. Maybe we use the event tracks as some sort of

digital signature that artists can use to determine which version of their

song gets out on the Net. (and thus who leaked it) Maybe like GPS we offer

the ‘low-res’ version to the public for free, but if you want the

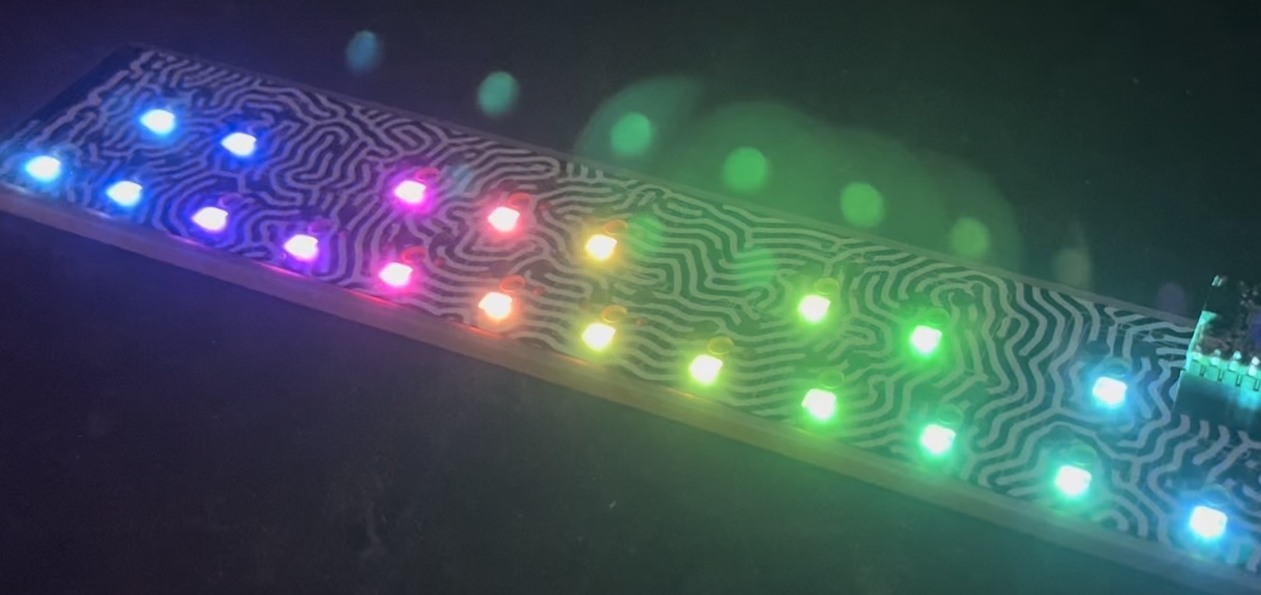

higher-res/higher-quality version (for your DMX light system you’re using for

your nightclub or theater troupe) you gotta pay. Maybe we apply a special

super-secret algo that generates only high-level rhythm events and then sell

it to music producers with “Want to make your track have the same ‘feel’ as

the latest Beastie Boys track, just apply this event template!”