At my new company, ThingM, Mike and I have completed a Technology Sketch for WineM, a smart wine rack. Below are a video demonstration and an abstract; a full description can be found on the ThingM site. We periodically create Technology Sketches as a way to explore the ideas we’re thinking about.

Abstract:

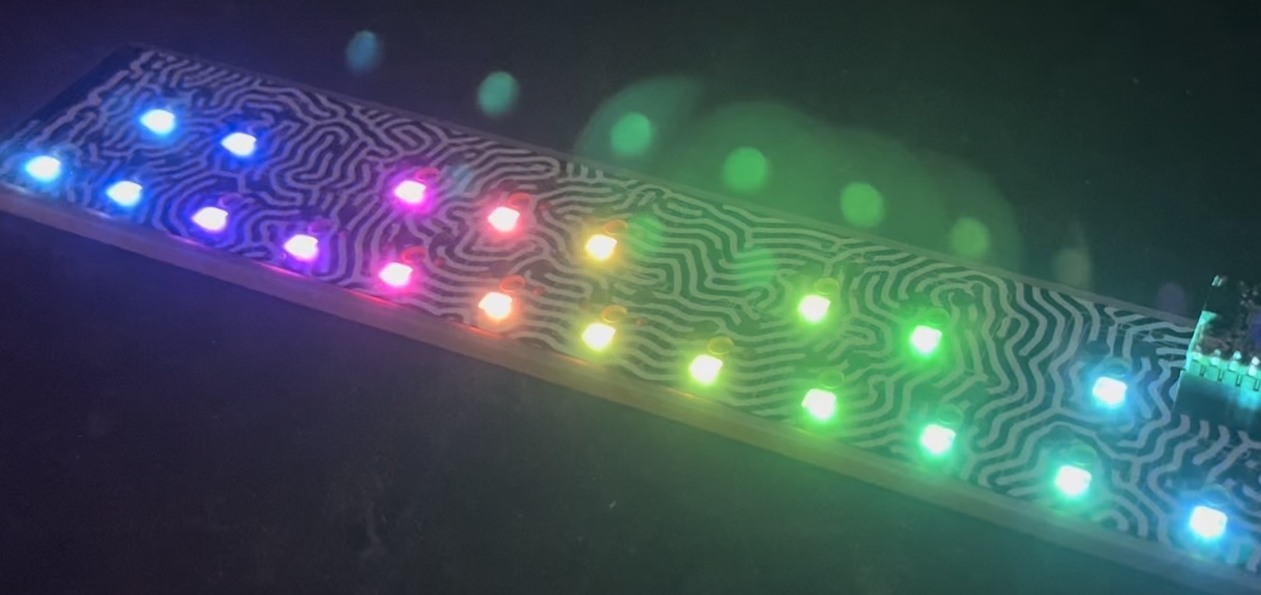

WineM is a Technology Sketch of a smart wine rack. It’s designed to locate wines in a wine rack using RFID tags attached to the bottles, and to show which wines have been located by lighting LED backlights behind them. Collectors (or anyone with a large wine cellar) can use it to search through collections, track the location of specific bottles and manage inventory with a minimum of data entry. Linking bottles to networked databases can provide information that would otherwise be too time-consuming or difficult to obtain (for example, the total value of a collection, or all the wine that is ready to drink).
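
To make the idea in the abstract concrete, here is a minimal Python sketch of the kind of bookkeeping it describes. It is an illustration under assumptions, not WineM's actual software: the `Bottle` and `Rack` names, the `(row, column)` slot addressing, the `drink_from` field and all the sample data are hypothetical, and the RFID readers and LEDs are stood in for by plain method calls and return values.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Tuple

Slot = Tuple[int, int]  # hypothetical (row, column) address of a rack position


@dataclass
class Bottle:
    tag_id: str      # ID stored on the bottle's RFID tag
    name: str
    value: float     # estimated value, as looked up in some database
    drink_from: int  # first year the wine is considered ready to drink


class Rack:
    def __init__(self, catalog: Dict[str, Bottle]) -> None:
        self.catalog = catalog            # tag ID -> bottle record
        self.slots: Dict[Slot, str] = {}  # slot -> tag ID currently read there

    def tag_seen(self, slot: Slot, tag_id: str) -> None:
        """Record that the reader at `slot` currently sees `tag_id`."""
        self.slots[slot] = tag_id

    def tag_removed(self, slot: Slot) -> None:
        """Record that the bottle at `slot` was taken out."""
        self.slots.pop(slot, None)

    def bottles(self) -> List[Tuple[Slot, Bottle]]:
        """All known bottles in the rack, ordered by slot."""
        return [(slot, self.catalog[tag])
                for slot, tag in sorted(self.slots.items())
                if tag in self.catalog]

    def slots_to_light(self, match: Callable[[Bottle], bool]) -> List[Slot]:
        """Slots whose backlight should turn on for a given search."""
        return [slot for slot, bottle in self.bottles() if match(bottle)]

    def total_value(self) -> float:
        return sum(bottle.value for _, bottle in self.bottles())


# Made-up sample data for illustration only.
catalog = {
    "tag-a": Bottle("tag-a", "Example Cabernet", 120.0, 2006),
    "tag-b": Bottle("tag-b", "Example Riesling", 45.0, 2010),
    "tag-c": Bottle("tag-c", "Example Barolo", 300.0, 2008),
}
rack = Rack(catalog)
rack.tag_seen((0, 0), "tag-a")
rack.tag_seen((0, 1), "tag-b")
rack.tag_seen((1, 0), "tag-c")

ready_slots = rack.slots_to_light(lambda b: b.drink_from <= 2008)
print(ready_slots)         # slots to backlight for "ready to drink in 2008"
print(rack.total_value())  # combined value of bottles currently in the rack
```

The point of the sketch is that placing a tagged bottle in a slot is the only data entry step: searches such as "ready to drink" and totals such as collection value then fall out of joining the slot readings with the bottle records.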